The goal of this activity is to use image processing techniques to extract musical notes from a scanned music sheet and play them in Scilab. I used the music sheet for "Twinkle Twinkle Little Star", shown in Figure 1.

The problem to solve is how to detect the type of each note and its pitch level.

Now, let us go through the steps of the method used. :)


| Figure 1. Music sheet used in the activity. |

A. Detection of the types of notes.

First, I cropped the image so that all the notes lie along the same staff lines. Then I inverted the colors, since functions in Scilab treat pixels with a value of 0 as background and 1 as foreground. Figure 2 shows the result.

| Figure 2. Music notes arranged along the same lines. |

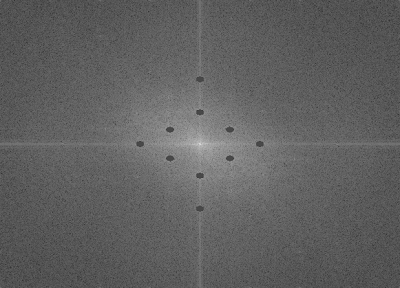
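The inversion step can be illustrated with a small Python sketch. This is not the Scilab code used in the activity; the helper name `invert_binary` and the tiny `scan` array are made up for illustration, and the image is assumed to be already binary (0 or 1).

```python
def invert_binary(image):
    """Swap 0 and 1 in a binary image stored as a list of rows."""
    return [[1 - pixel for pixel in row] for row in image]

# Hypothetical 3x4 scan: 1 = white paper, 0 = black ink.
scan = [
    [1, 1, 1, 1],
    [1, 0, 0, 1],
    [1, 1, 1, 1],
]

# After inversion the ink becomes foreground (1) and the paper background (0).
inverted = invert_binary(scan)
```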

Next, I made a template image of the quarter note, shown in Figure 3, and took the correlation of the music notes with this template. The result is an image with high pixel values at points where the correlation is high. I then converted it into a binary image with a threshold value of 0.6, which filters out points with a low correlation value. Figure 4 shows the correlation image and its thresholded version.

| Figure 3. Template image used to detect quarter notes. |

| Figure 4a. Correlation image of the quarter note to the rest of the notes in the music sheet. |

| Figure 4b. Thresholded version of the correlation. |

Figure 4. Correlation of the quarter note to the rest of the notes and its thresholded version.
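To make the correlate-then-threshold idea concrete, here is a rough Python sketch on a toy image. It uses a direct normalized cross-correlation (cosine similarity per window), which may differ from how the correlation was computed in Scilab. Only the 0.6 threshold comes from the activity; the function names, the 2x2 template, and the toy image are assumptions for illustration.

```python
import math

def normalized_correlation(image, template):
    """Slide the template over the image and score each position in [0, 1].

    Each score is the cosine similarity between the template and the image
    patch under it, so an exact match of the template scores 1.0.
    """
    t_rows, t_cols = len(template), len(template[0])
    i_rows, i_cols = len(image), len(image[0])
    t_norm = math.sqrt(sum(v * v for row in template for v in row))
    scores = []
    for r in range(i_rows - t_rows + 1):
        row_scores = []
        for c in range(i_cols - t_cols + 1):
            dot = 0
            patch_sq = 0
            for i in range(t_rows):
                for j in range(t_cols):
                    p = image[r + i][c + j]
                    dot += p * template[i][j]
                    patch_sq += p * p
            denom = t_norm * math.sqrt(patch_sq)
            row_scores.append(dot / denom if denom else 0.0)
        scores.append(row_scores)
    return scores

def threshold(scores, level=0.6):
    """Keep only the positions whose correlation exceeds the threshold."""
    return [[1 if s > level else 0 for s in row] for row in scores]

# Toy stand-ins: a filled 2x2 "quarter note" head as the template, and an
# image holding one filled head (left) and one sparser shape (right).
template = [[1, 1],
            [1, 1]]
image = [
    [0, 0, 0, 0, 0, 0, 0, 0],
    [0, 1, 1, 0, 0, 1, 0, 0],
    [0, 1, 1, 0, 0, 0, 1, 0],
    [0, 0, 0, 0, 0, 0, 0, 0],
]

scores = normalized_correlation(image, template)
binary = threshold(scores, 0.6)
```

In this toy case the filled head leaves a five-pixel blob in `binary` while the sparser shape leaves a single pixel, which mirrors the difference in blob size between quarter and half notes.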

I noticed that the blobs are bigger at points where there is a quarter note than at points where there is a half note. This is expected, since the correlation is higher where there are quarter notes. Instead of completely eliminating the blobs for the half notes, I used the difference in blob size to tell quarter notes and half notes apart. Using bwlabel(), I gained access to each blob and classified it based on its size.

The problem with bwlabel() is that it does not label the blobs in left-to-right order. To fix this, I obtained the x coordinate of each blob, which in matrix terms is the column where the blob lies. I took the first column occupied by each blob and used lex_sort() to arrange the blobs in increasing order of that column. Now I have the note types in the order they appear in the music sheet. :)

I gave the half note a value of 0.5 s and the quarter note a value of 0.75 s. Note that choosing appropriate time values is very important. A poor choice of time values will distort the rhythm, and the melody will no longer sound right.
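The labelling, size check, and left-to-right sorting can be sketched in Python as follows. This is only an illustration of the idea, not a port of bwlabel() or lex_sort(): the flood fill assumes 4-connectivity, and the names `label_blobs`, `classify_notes`, and the `size_cutoff` value are hypothetical.

```python
def label_blobs(binary):
    """Return the blobs of a binary image, each as a list of (row, col) pixels.

    Blobs are found in raster order (top to bottom, left to right), so they
    are not necessarily ordered by horizontal position. 4-connectivity is an
    assumption made for this sketch.
    """
    rows, cols = len(binary), len(binary[0])
    seen = [[False] * cols for _ in range(rows)]
    blobs = []
    for r in range(rows):
        for c in range(cols):
            if binary[r][c] != 1 or seen[r][c]:
                continue
            stack, pixels = [(r, c)], []
            seen[r][c] = True
            while stack:
                y, x = stack.pop()
                pixels.append((y, x))
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                    inside = 0 <= ny < rows and 0 <= nx < cols
                    if inside and binary[ny][nx] == 1 and not seen[ny][nx]:
                        seen[ny][nx] = True
                        stack.append((ny, nx))
            blobs.append(pixels)
    return blobs

def classify_notes(binary, size_cutoff):
    """Label each blob as quarter or half by size, ordered by first column."""
    notes = []
    for pixels in label_blobs(binary):
        first_col = min(x for _, x in pixels)
        kind = "quarter" if len(pixels) >= size_cutoff else "half"
        notes.append((first_col, kind))
    notes.sort()  # increasing first column = left-to-right reading order
    return [kind for _, kind in notes]

# Toy thresholded image: the small blob on the right sits higher up, so a
# raster scan finds it first even though it comes second in the music.
binary = [
    [0, 0, 0, 0, 0, 1, 0],
    [0, 1, 0, 0, 0, 0, 0],
    [1, 1, 1, 0, 0, 0, 0],
    [0, 1, 0, 0, 0, 0, 0],
]

note_types = classify_notes(binary, size_cutoff=3)
print(note_types)
```

The cutoff would have to be picked by inspecting the actual blob sizes in the thresholded correlation image.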

B. Detection of the pitch level.

The next task is to detect the pitch level. Since pitch is indicated by the vertical position of a note on the music sheet, I obtained the y position of each blob, which in matrix terms is the row on which the blob lies. I used the largest row value of each blob as the indicator of its pitch and then, based on the image, matched it to the corresponding pitch. The following shows the range of row values for each pitch.

C = 40-43

D = 36-39

E = 33-35

F = 31-32

G = 25-28

A = less than 24

From these values, I was able to determine the pitch of each note. I also applied the sorting technique used earlier to arrange the pitches in the correct order. Now that I have the note types and the pitch levels, the next step is to make Scilab sing. :)
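The row ranges listed above translate directly into a lookup. The Python sketch below uses exactly those ranges; the function name `pitch_from_row` is made up, and returning `None` for rows that fall in the gaps between ranges is an assumption about how to handle them.

```python
# (pitch, lowest row, highest row), taken from the ranges listed above.
PITCH_ROWS = [
    ("C", 40, 43),
    ("D", 36, 39),
    ("E", 33, 35),
    ("F", 31, 32),
    ("G", 25, 28),
]

def pitch_from_row(row):
    """Map the largest row value of a blob to a pitch name."""
    if row < 24:  # "A = less than 24"
        return "A"
    for pitch, low, high in PITCH_ROWS:
        if low <= row <= high:
            return pitch
    return None  # the row falls outside every listed range

# Hypothetical row values for four blobs.
pitches = [pitch_from_row(row) for row in (41, 41, 26, 20)]
print(pitches)
```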

C. Making Scilab "sing"

In Scilab, I used a sinusoid function to make sound, with the frequency of each sinusoid set to the frequency of the corresponding pitch level. The function soundsec() was used to indicate how long the sound for each pitch would last, and sound() played the melody. I used wavwrite() to save the sound file. :)
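For readers without Scilab, the same idea can be sketched in Python with the standard library. This is an analogue, not the code used in the activity. The sample rate, the note durations, and the choice of octave (the one starting at middle C, with equal-tempered frequencies) are all assumptions, and `tone`, `melody`, and `write_wav` are hypothetical helper names.

```python
import io
import math
import struct
import wave

SAMPLE_RATE = 8000  # samples per second (assumption)

# Equal-tempered frequencies in Hz for the octave starting at middle C
# (assumption: the octave used in the activity is not stated).
FREQ = {"C": 261.63, "D": 293.66, "E": 329.63,
        "F": 349.23, "G": 392.00, "A": 440.00}

QUARTER, HALF = 0.5, 1.0  # durations in seconds (illustrative assumptions)

def tone(freq_hz, seconds, rate=SAMPLE_RATE):
    """Sample sin(2*pi*f*t) for the given duration."""
    count = int(round(seconds * rate))
    return [math.sin(2 * math.pi * freq_hz * i / rate) for i in range(count)]

def melody(notes, rate=SAMPLE_RATE):
    """Concatenate one sinusoid per (pitch, duration) pair."""
    samples = []
    for pitch, seconds in notes:
        samples.extend(tone(FREQ[pitch], seconds, rate))
    return samples

def write_wav(target, samples, rate=SAMPLE_RATE):
    """Write samples in [-1, 1] as 16-bit mono PCM to a path or file object."""
    frames = b"".join(struct.pack("<h", int(s * 32767)) for s in samples)
    with wave.open(target, "wb") as wav:
        wav.setnchannels(1)
        wav.setsampwidth(2)
        wav.setframerate(rate)
        wav.writeframes(frames)

# First line of the song: C C G G A A G, with the last note held longer.
first_line = [("C", QUARTER), ("C", QUARTER), ("G", QUARTER), ("G", QUARTER),
              ("A", QUARTER), ("A", QUARTER), ("G", HALF)]
samples = melody(first_line)

buffer = io.BytesIO()  # in-memory file; a filename string also works
write_wav(buffer, samples)
```

Passing a filename such as `"twinkle.wav"` instead of `buffer` would save the result to disk, much like wavwrite() does in Scilab.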

To answer the question in the manual: to add a rest, set the frequency to zero in the sound matrix. A sinusoid with zero frequency is zero everywhere, so there is no vibration and therefore no sound.
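A quick numerical check of the zero-frequency rest, again as a Python sketch rather than Scilab code. The helper `tone` and the 8000 Hz sample rate are assumptions for illustration.

```python
import math

def tone(freq_hz, seconds, rate=8000):
    """Sample sin(2*pi*f*t); the sample rate is an assumption."""
    count = int(round(seconds * rate))
    return [math.sin(2 * math.pi * freq_hz * i / rate) for i in range(count)]

rest = tone(0.0, 0.25)    # zero frequency: every sample is sin(0) = 0
note = tone(440.0, 0.25)  # an audible A for comparison

print(max(abs(s) for s in rest))  # prints 0.0
```

The rest still takes up its share of samples, so it occupies time in the melody without producing any sound.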

This activity was the hardest one for me. I thought I was not going to finish it. But now that I did, it feels so great. hehe. :)

I would like to thank Arvin Mabilangan for sharing his insights about this activity. It really helped a lot. I would give myself a score of 10/10 for successfully making Scilab sing with the correct notes and pitch. :)

Click this link!!! Twinkle Twinkle Little Star

References:

[1] Music sheet: www.mamalisa.com/images/scores/twinkle_twinkle_little_star.jpg

[2] Frequencies: http://www.seventhstring.com/resources/notefrequencies.html

[3] Pitch levels: http://library.thinkquest.org/15413/theory/note-reading.htm